Philipp Fischer is a founding partner of the Oberson Abels law firm. In this article, he and Ciarán Bryce look at some of the obligations on organizations put forward by the new European Union regulation on Artificial Intelligence.

1. Introduction

The European Union’s Artificial Intelligence Act entered into force on August 1st, 2024 and will become fully effective in two years’ time (August 2026). The overriding goal of the regulation is to protect the health, safety and fundamental rights of EU citizens from the risks that IT systems using AI can pose. Since AI systems can be developed or deployed outside of the EU, the territorial scope of the legislation is effectively worldwide whenever an AI system impacts EU residents in some manner.

The AI Act follows on the heels of the General Data Protection Regulation (GDPR), whose goal is to regulate the processing by organizations of citizens’ personal data. The GDPR has had a significant impact on all organizations because it led them to modify IT tools and business processes to comply with the regulation. In some cases, this was a costly and cumbersome transformation. The new AI Act may leave organizations worried that another costly adaptation process will have to be undertaken.

The goal of this article is to outline certain measures that organizations can take to comply with the AI Act. Our goal is not to provide a detailed summary of the Act. We believe that steps taken to become compliant with the GDPR are, at least in part, a sound basis for compliance with the new AI Act, which should be good news for organizations.

Table of Contents

2.1. Why Was an Act Considered Necessary?

3. Avoiding Regulation Overwhelm – GDPR and the Register of Processing

5.1. Processing Job Application CVs

5.2. Content Creation (marketing design, creating code with Copilot)

2. The AI Act in a Nutshell

It is worth reviewing why the Act was deemed necessary by the European Parliament. The Act responds to identified risks with AI, and organizations that use AI cannot plead ignorance of these risks.

2.1. Why Was an Act Considered Necessary?

Artificial intelligence refers to the ability of an IT system to reason or make decisions in a manner similar to the human brain. The term was coined by computer scientist John McCarthy in 1955, a very long time before the emergence of ChatGPT in 2022!

AI should arguably be called Advanced Algorithms, as the last decades have seen the design of algorithms that try to mirror human decision-making processes. One field of the domain is machine learning, where probability-based algorithms are developed by training them on observed real-world data. This approach has worked really well, and with the explosion of the Web in the last 30 years, the data available to train AI algorithms is huge. This data availability, coupled with a continued improvement in computing power which these algorithms require (often thanks to the Graphics Processing Units designed for gaming consoles!), has brought us to the situation today where AI processing is widely available and used.

A Google tutorial provides a good summary of the applications of AI today. These include:

- Natural Language Processing tasks, including translations, dictation, sentiment analysis, SPAM filtering, etc. Sentiment analysis is the evaluation of opinions and trends from text and other forms of content - something very useful to marketers, but which triggers significant data protection challenges.

- Computer vision, where AI interprets objects in images. This is used by self-driving cars and security surveillance systems.

- The design and operation of robots.

- Generative AI, of which ChatGPT, Dall-e and Github Copilot are among the most well-known tools, is a form of AI dedicated to the creation of textual, audio or video content. GenAI is used for tasks like AI-Pair programming (using AI to create program code) and the creation of marketing content.

- AI applications are found, and in fact have been found for a number of years already, in business and finance (e.g., analysis of market trends), health (disease diagnosis and personalized healthcare), and also in manufacturing, education and transportation. Clearly, humans have already delegated an enormous amount of decision-making to AI.

So what is it about AI that warrants an Act? Basically, AI has several significant risks:

- Explainable AI is a tenet that says whenever an AI system takes a decision, a human operator can always understand why the AI took that decision. In healthcare for instance, a doctor must be able to understand why an AI made a certain diagnosis. The challenge is that as AI systems get trained on increasing volumes of data, explainable AI cannot realistically be assured.

- AI has become very powerful for voice and video manipulation. AI needs only a few seconds to impersonate someone’s voice, and deep-fakes and call-scams have become a serious problem. For example, financial institutions no longer rely on “callbacks” to clients to identify fraudulent client instructions.

- AI systems need a lot of data to train, including personal data. Privacy advocates believe that using personal data to train AI systems, without sufficient awareness by people (data subjects in GDPR parlance), could be a violation of the GDPR and other privacy regulations. Another risk is that content might be used in training in violation of the copyright on that content.

- AI can make mistakes. In generative AI, the term hallucination denotes the possibility of misleading or incorrect content being generated. Further, AI algorithms learn from training data, and in the case where data comes from websites, the algorithms ultimately reflect the many biases and errors of these sites.

- Generative AI can facilitate the creation of malware because it lowers the entry barrier for malicious actors. It is also used to automate content generation for social media posts as part of influence campaigns.

- AI algorithms are computationally expensive, so the environmental impact of AI is high.

The EU wants to encourage innovation and AI adoption, but believes that this can only be done with measures in place to manage AI risk. As with the GDPR, the AI Act aims to reinforce European values, including facilitating the exchange of goods, services and people within Europe. The AI Act helps achieve this by setting common standards for safe and trustworthy AI systems across the EU. Time will tell whether the AI Act becomes the blueprint for similar regulations elsewhere, as has been the case with the GDPR since 2018.

The EU’s AI Act is not the only initiative designed to regulate AI. China and Australia have introduced laws. In the US, President Biden issued an executive order calling for safety standards for AI in public administrations, and seven top AI firms signed an open letter in 2023 promising to work for safe and trustworthy AI.

2.2. What is in the AI Act?

Our goal here is to highlight salient features of the Act that can have an impact on organizations.

The key roles defined by the AI Act are providers and deployers.

- Providers are people or organizations that develop an AI system, or who put an AI system into service. For instance, Github is a provider for the Copilot AI-programming tool.

- Deployers are people or organizations that employ an AI system in the provision of some service, perhaps to clients of theirs. For instance, an organization using Github Copilot or a bank using an AI-powered chatbot to communicate with customers is a deployer.

In the following, we refer to a user as any person or organization that avails of AI-based services. A programmer working with an organization that has deployed Github Copilot is a user.

A common use-case scenario today is for an organization to choose and install a basic AI model, and to adapt this model for their own needs, for instance, by re-training or fine-tuning the model with organizational data, or by optimizing the internal parameters of the model. In certain cases, the organization may be a provider and deployer, with its clients and employees being users.

The obligations for organizations under the Act depend on their role. Providers must demonstrate the safety and trustworthiness of the AI tools they develop. The deployers are subject to a lower set of obligations, mostly related to transparency of AI use.

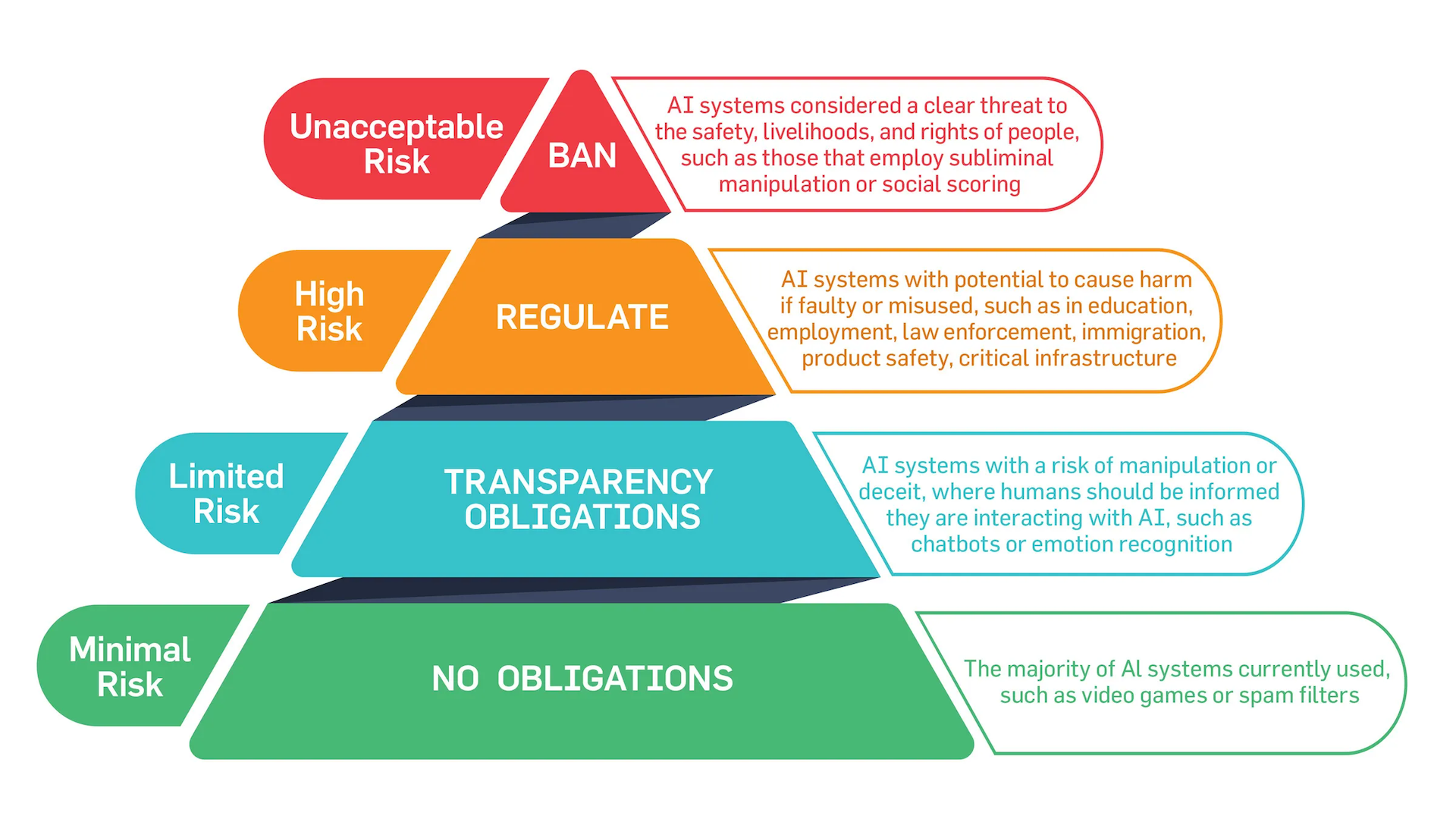

A fundamental requirement of the Act is that the risk of each AI system must be evaluated, and the obligations of deployers and providers depend on the result of the risk classification. As shown in the following figure, four levels of risk are defined by the Act. The Act also defines the use cases which fall within each category.

- Unacceptable risk. AI systems that facilitate exploitation, manipulation or social scoring fall into this category. Commonly cited scenarios include indiscriminate biometric identification of people, social scoring leading to later discrimination, emotion recognition software in the workplace, and any AI that detects and exploits a person’s vulnerabilities. AI systems in this category are simply banned.

- High-risk. AI systems in this category are those that can cause harm to citizens if they are used in an inappropriate manner. The domain of use is important, with education, job application processing, immigration and border control, healthcare, and democratic and judiciary processes being particularly scrutinized. The obligations on the provider include registering the AI system in an EU database as well as implementing a range of governance procedures. We return to these procedures later on.

- Limited risk. These are systems where the risks do not go beyond basic deception or trickery. Most chatbots, like ChatGPT, fall into this category. The main obligation for providers and deployers under the Act is transparency - the requirement that users be made aware that the IT service they are interacting with employs AI.

- Minimal risk. These systems pose no direct risks, with commonly cited examples being SPAM filters and gaming applications. No specific requirements are mentioned for these systems.

Four levels of risk of AI systems are defined under the AI Act.
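The four levels can be captured in a small lookup that an organization might use in its own inventory of AI systems. The following is only a minimal sketch: the names `RiskLevel`, `OBLIGATIONS`, `is_allowed` and `obligations_for` are our own illustrative choices, and the obligations listed simply paraphrase the summary above rather than the legal text of the Act.

```python
from enum import Enum


class RiskLevel(Enum):
    """The four risk levels described in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Headline consequences per level, paraphrased from the article
# (an illustrative summary, not a legal checklist).
OBLIGATIONS = {
    RiskLevel.UNACCEPTABLE: ["banned"],
    RiskLevel.HIGH: [
        "register the system in the EU database",
        "implement governance procedures",
    ],
    RiskLevel.LIMITED: ["make users aware that the service employs AI"],
    RiskLevel.MINIMAL: [],
}


def is_allowed(level: RiskLevel) -> bool:
    """Systems classified as unacceptable risk may not be used at all."""
    return level is not RiskLevel.UNACCEPTABLE


def obligations_for(level: RiskLevel) -> list:
    """Return a copy of the obligations recorded for a risk level."""
    return list(OBLIGATIONS[level])


if __name__ == "__main__":
    for level in RiskLevel:
        print(level.value, is_allowed(level), obligations_for(level))
```

Keeping the mapping in one place makes it easy to review and update as guidance on the Act evolves.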

Governance deals with the procedures and tools that organizations put in place to facilitate IT management, and to ensure that IT usage complies with legal and company regulations. While the AI Act does not give many specifics, it does outline IT governance principles that organizations need to respect when implementing AI-based solutions.

For providers, the main principles are the following:

- A documented risk assessment of the AI system must be made, and the system classified in one of the four categories (unacceptable, high, limited or minimal).

- In the event of a high risk classification, the system must be registered in an EU database.

- Extensive documented testing and validation must be done on the AI system.

- The organization must set up post-deployment monitoring of the system and keep records of incidents.

For deployer organizations, the key principles are:

- A deployer should carry out a documented risk assessment before deploying the AI system and ensure that the tool is the most appropriate for the task at hand.

- There should be a training and awareness program for users of the system that explains risks.

- Incidents must be reported to the provider, thus helping the provider respect its monitoring obligations.

- There must be transparency for users. On the one hand, a user must be made aware that he or she is interacting with a system that employs AI. On the other hand, content created using AI should be tagged as AI-content.

Note the insistence on documentation for both deployers and providers. This is to facilitate compliance verification by auditors, and to help facilitate assessment of the whole supply chain.

The penalty format is roughly based on that of the GDPR. Depending on the size and revenue of the company, fines for AI Act violations can go up to 35 million EUR, or 7 percent of annual global turnover. While the law has only just come into effect, full compliance is required by August 2026.
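To give a feel for the order of magnitude, the two ceilings can be compared for a given turnover. The sketch below assumes that the higher of the two figures applies, which is our reading of the rule for the most serious violations by larger companies; smaller companies and other types of violation are subject to different ceilings, so the result should be treated as illustrative only. The function and constant names are our own.

```python
# Ceilings quoted in the article for AI Act fines.
FIXED_CAP_EUR = 35_000_000
TURNOVER_SHARE_PERCENT = 7


def max_fine_eur(annual_global_turnover_eur: int) -> int:
    """Upper bound of the fine, ASSUMING the higher ceiling applies.

    Integer arithmetic is used so that results are exact in euros.
    """
    turnover_cap = annual_global_turnover_eur * TURNOVER_SHARE_PERCENT // 100
    return max(FIXED_CAP_EUR, turnover_cap)


if __name__ == "__main__":
    for turnover in (100_000_000, 500_000_000, 1_000_000_000):
        print(f"turnover {turnover:>13,} EUR -> ceiling {max_fine_eur(turnover):,} EUR")
```

Under this assumption, the turnover-based ceiling only exceeds the fixed amount once annual global turnover passes 500 million EUR.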

3. Avoiding Regulation Overwhelm – GDPR and the Register of Processing

The GDPR came into effect in 2018, and many organizations spent considerable resources adapting their organizational processes to be compliant. It would be convenient if the effort made to implement the GDPR could also help with compliance with the AI Act. We believe that this is the case. The GDPR put forward two measures that are obligatory for large organizations, and highly recommended for others: the register of processing activities (ROP) and the data protection officer (DPO) role. These measures could serve as a basis for AI Act compliance.

In its simplest form, a register of processing activities is a log of all processing activities within the organization (e.g., payroll, CRM, Website management, etc.). Each activity’s description explains what the purpose of the activity is, which data is processed, what the legal basis for that processing is when personal data is involved, why processing is absolutely necessary, what tools are used for the processing, and how the processing is made secure. The advantages for organizations which have implemented the ROP are that they:

- Have an up-to-date account of all processing activities in their organization. This allows organizations to fulfill their duty of information towards EU residents, since the latter have the right to know precisely how their personal information is processed.

- Have identified the data used by each activity. A risk analysis will have been done for those activities that process personal data. This allows them to implement the required security measures, which need to be tailored to the level of risk.

The ROP does not have a fixed structure, even though several organizations have proposed templates. For deployer organizations, the ROP can therefore be extended to include AI-related information such as the justification for using AI for the activity, an AI-related risk analysis, and a description of the measures to monitor the safe usage of the AI tool. Such an expanded ROP could also be used to meet the transparency requirements set forth in the AI Act, at least with respect to AI tools which rely on personal data. In practice, this is often done by integrating a specific AI-related section in the GDPR privacy statement, yet another situation where the data protection requirements and the AI-related regulatory requirements present similarities.
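As an illustration of what such an extension could look like, the sketch below models a register entry with an optional AI section. Since the ROP has no fixed structure, every class and field name here (`ProcessingActivity`, `AIUsage`, `missing_ai_fields`, and so on) is a hypothetical choice of ours, and the example entries are invented.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class AIUsage:
    """AI-related additions to a register entry (hypothetical fields)."""
    tool: str
    justification: str = ""
    risk_analysis: str = ""
    monitoring_measures: list[str] = field(default_factory=list)


@dataclass
class ProcessingActivity:
    """One entry of a register of processing activities (simplified)."""
    name: str
    purpose: str
    data_processed: list[str]
    legal_basis: str
    tools: list[str]
    security_measures: list[str]
    ai_usage: AIUsage | None = None  # None when the activity uses no AI


def missing_ai_fields(activity: ProcessingActivity) -> list[str]:
    """List the AI-related items still undocumented for an activity."""
    ai = activity.ai_usage
    if ai is None:
        return []
    missing = []
    if not ai.justification:
        missing.append("justification")
    if not ai.risk_analysis:
        missing.append("risk_analysis")
    if not ai.monitoring_measures:
        missing.append("monitoring_measures")
    return missing


# Invented example entries.
payroll = ProcessingActivity(
    name="Payroll", purpose="Pay employees",
    data_processed=["salary", "bank details"], legal_basis="contract",
    tools=["payroll software"], security_measures=["access control"],
)
cv_screening = ProcessingActivity(
    name="CV screening", purpose="Shortlist job applicants",
    data_processed=["CVs"], legal_basis="pre-contractual measures",
    tools=["applicant tracking system"], security_measures=["encryption"],
    ai_usage=AIUsage(tool="hypothetical CV-ranking tool",
                     justification="High volume of applications"),
)

register = [payroll, cv_screening]
ai_activities = [a.name for a in register if a.ai_usage is not None]
```

A check such as `missing_ai_fields(cv_screening)` gives the person maintaining the register a quick list of what still needs to be documented before the tool is deployed.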

Under the GDPR, the DPO is an organizational role whose brief is to oversee the implementation of measures for GDPR conformity in an organization, from the ROP’s maintenance to employee awareness programs. The role involves challenging organizational management over plans to implement activities when there is a risk of GDPR non-compliance. The DPO has a broad view over all activities within the organization.

An equivalent role, let us call it AI Officer, can be established for the AI Act, as the requirements are the same: ensuring a complete view of all AI-related projects, ensuring governance measures for activities using AI tools, challenging management and implementing awareness programs.

4. Implementation Topics

Once the AI Officer has been appointed, he or she will work with other people in the organization, like the DPO, IT and IT security (though these might of course be the same people). The collaboration with the DPO is useful because, by requiring justifications for the necessity and legality of each activity’s processing, it helps avoid the urge to over-digitalize processes. The collaboration with IT can help improve organizational data management. Many AI projects are known to fail because of poor data quality in organizations. For both AI Officers and DPOs, verifying data provenance for services is crucial to ensure compliance, so the AI Act, GDPR and data management projects are intricately linked.

One recommended tool much talked about recently is the Organizational AI Charter. The idea of a charter is to permit an organization to demonstrate its awareness of AI risks, and to mention the restrictions it imposes on itself to address these risks (for example, by defining the kinds of AI processing that are unacceptable to the organization). The charter is a means of communicating values to employees, clients and to the public at large. Such a Charter can also serve as a useful tool to instruct employees about responsible use of AI in their day-to-day activities.

Another measure to take is a Documented AI Policy. This should define the test procedures put in place to validate AI safety and to ensure the terms and conditions of AI services are clear and up to date. The policy should outline the awareness program for employees, explain the steps taken by the organization to ensure that promises made in the AI Charter are respected, and define an incident-disclosure process.

A number of AI test practices are common today. In red-teaming, a group of people from within or outside of the organization test the AI system until it breaks, in an attempt to detect problems. This is useful for both deployers and providers. In field-testing, the system is tested in the environment of the deployer. In A/B testing, two model versions, say a model and a fine-tuned version of the model, are compared for the quality of their outputs for a benchmark of input questions.
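The A/B testing idea can be sketched in a few lines. The example below is a deliberately simplified illustration: the two "models" are toy stand-in functions, the benchmark is invented, and output quality is reduced to an exact-match rate, whereas real evaluations typically rely on richer metrics and human review. All names are our own.

```python
from typing import Callable, Dict, List, Tuple

Model = Callable[[str], str]          # a model maps a question to an answer
Benchmark = List[Tuple[str, str]]     # (question, expected answer) pairs


def exact_match_rate(model: Model, benchmark: Benchmark) -> float:
    """Share of benchmark questions answered exactly as expected."""
    if not benchmark:
        raise ValueError("benchmark must not be empty")
    hits = sum(
        1 for question, expected in benchmark
        if model(question).strip().lower() == expected.strip().lower()
    )
    return hits / len(benchmark)


def ab_test(model_a: Model, model_b: Model, benchmark: Benchmark) -> Dict[str, float]:
    """Score two model versions on the same benchmark."""
    return {
        "model_a": exact_match_rate(model_a, benchmark),
        "model_b": exact_match_rate(model_b, benchmark),
    }


# Toy stand-ins for a base model and its fine-tuned version.
def base_model(question: str) -> str:
    answers = {"Which EU regulation protects personal data?": "GDPR"}
    return answers.get(question, "I do not know")


def fine_tuned_model(question: str) -> str:
    answers = {
        "Which EU regulation protects personal data?": "GDPR",
        "How many risk levels does the AI Act define?": "four",
    }
    return answers.get(question, "I do not know")


BENCHMARK: Benchmark = [
    ("Which EU regulation protects personal data?", "GDPR"),
    ("How many risk levels does the AI Act define?", "Four"),
]

scores = ab_test(base_model, fine_tuned_model, BENCHMARK)
```

Recording the benchmark and the resulting scores alongside each model version also contributes to the documented testing that the Act insists on.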

The community is organizing itself to help achieve trustworthy AI. For instance, a project hosted by the Linux Foundation for AI and Data proposes the Adversarial Robustness Toolbox - a series of open-source tools on Github for automated adversarial testing. Such tools are especially useful for providers when testing their AI models against the range of known cybersecurity attacks on AI systems.

As mentioned, the European AI Act is just one initiative that aims to enforce safe and trustworthy AI. In the US, the National Institute of Standards and Technology (NIST) has published guidelines for Trustworthy and Responsible AI following President Biden’s executive order. An updated document for Generative AI was published in July 2024. The document covers many of the points covered in this article, with a strong emphasis on documented procedures and structured feedback from users to developers. A strong point of the NIST standard is that it serves as a checklist of actions to undertake. The standard is useful to both providers and deployers.

5. Scenarios

Let us consider briefly three scenarios where an organization may (be tempted to) use AI.

5.1. Processing Job Application CVs

It is quite common today for companies to use software for automated processing of CVs, given the large number of on-line applications for positions. Job applicants are using AI also - notably to help write CVs and cover letters.

Some processing might appear not to be “intelligent”, e.g., the software might be a rule-based program that eliminates CVs based on the age of the candidate. Our advice is that there is no point trying to exploit such loopholes. It is best to treat all processing activities as activities that require a risk analysis.

In any case, processing CVs might be an instance of high-risk processing because people are being profiled. When the software integrates AI, its provider must register it in the EU database. The deployer organization must test and monitor the tool. Automated CV processing might be an area where one should avoid over-digitalization (digitalizing activities when this is not really needed). The justification for automated processing of CVs is generally weak.

5.2. Content Creation (marketing design, creating code with Copilot)

In such cases, a provider organization must take measures to ensure that training data and output do not violate IP license restrictions, and do not contain personal data whose owner did not consent to its use. This can typically be done by inserting filters that provide transparency on the level of risk that the output contains IP-protected content.

For marketing content, a risk assessment must be made, though the risk level is most likely limited. If there is a risk of trickery, e.g., an image of a person’s face with smooth skin is generated to market anti-aging cream, then for transparency, the company must indicate that AI has been used.

5.3. Customer Chatbots

A risk analysis is needed before deploying a chatbot because, by definition, a chatbot’s role is to influence the customer, even if this only relates to the choice of product to buy.

You should also verify the age of the customer, and make the customer aware that he or she is conversing with an AI. Finally, testing and monitoring the chatbot is obligatory.

6. Conclusions

This article has looked at implications of the EU’s AI Act for organizations. Key take-aways are:

- Organizations should use their register of processing activities as a basis to identify activities using AI and to conduct their risk analysis. The GDPR offers a helpful start on the road to compliance with the AI Act.

- Documented testing procedures are obligatory for model providers; monitoring and reporting are obligatory for organizations deploying AI systems. Transparency is always required. AI providers must assign a risk level to their systems. Depending on the risk level, the system might be banned or it may have to be registered in a database maintained by the EU.

- Organizations cannot plead ignorance in the event of violations of the AI Act. Any organization deploying an AI-based service should conduct the required risk analysis prior to its launch.

Authors: Philipp Fischer and Ciarán Bryce