Summary

The InfoQ website is publishing a series of articles on using generative AI models within an enterprise. One article gives adoption tips, notably about carefully measuring production parameters like available compute capacity and number of active users, as well as recommending that deployments be future-proof so that models can be replaced with minimum impact on clients and applications. Another of the articles reviews the different prompting methods currently popular.

Several articles have been published on security. WIRED reports that generative AI is increasingly used by criminals; for example, romance scammers are using real-time face replacement to masquerade as potential love interests. The EU is debating a controversial regulation that would allow authorities to use AI to analyze messaging app exchanges for child sexual abuse material and evidence of grooming activities. Meanwhile, the chief of the Australian Security Intelligence Organisation says that AI is being used by terrorists to improve their recruitment campaigns on social media.

AI research has been recognized with two Nobel prizes. Geoffrey Hinton and John Hopfield received the prize in physics for work on neural networks using principles from physics. Google DeepMind’s Demis Hassabis and John M. Jumper were co-recipients of the prize in chemistry for their work with AI to predict protein structures. It was not all good news for Google this week: the US Department of Justice is proposing to split Android and Google Chrome from the company to break Google’s monopoly on search and online advertising.

Finally, Hugging Face has announced a new AI leaderboard for models in the FinTech domain. The benchmark evaluates models’ financial skills like extracting financial information from reports and predicting stock movements.

Table of Contents

1. Navigating LLM Deployment: Tips, Tricks, and Techniques

2. Gradio 5 is here: Hugging Face’s newest tool simplifies building AI-powered web apps

3. Maximizing the Utility of Large Language Models (LLMs) through Prompting

4. Google DeepMind leaders share Nobel Prize in chemistry for protein prediction AI

5. Introducing the Open FinLLM Leaderboard

6. 'Chat control': The EU's controversial CSAM-scanning legal proposal explained

7. Australia’s spy chief warns AI will accelerate online radicalization

8. Pig Butchering Scams Are Going High Tech

9. DOJ suggests splitting off Chrome and Android to break Google’s monopoly

1. Navigating LLM Deployment: Tips, Tricks, and Techniques

This InfoQ article from the cofounder of TitanML gives seven tips to companies thinking about deploying a language model on-premises. The advantages of on-premises deployment include data privacy (since company data never leaves the company infrastructure), performance (especially when retrieval-augmented generation is linked to the model), and decreased costs in the long run. That said, self-hosting is a challenge because of the required computing resources. The author mentions that even a relatively small model, one with, say, seven billion parameters, still requires 14 GB of RAM and expensive GPUs. The seven tips are the following:

- Organizations should begin by being precise about production requirements. This includes clarifying whether they require real-time or batch latency for the model, the expected number of concurrent users, and the hardware available in the organization.

- The models deployed should always use quantization. This is a process to reduce memory and computing requirements by decreasing the bit width of model weights. For instance, this could involve reducing from 16-bit floating point to 8-bit integers.

- The organization should deploy GPU utilization strategies that optimize inference times in models. One approach is to batch inference processing. In dynamic batching, the engine waits until a number of requests have arrived before processing them together, but this gives spiky performance. In continuous batching, multiple user requests are processed simultaneously by the model engine, in a manner analogous to an operating system that time-shares between a number of concurrently running applications. Parallelism is another technique to improve inference times. In this approach, processing is split over separate GPUs.

- The large computation requirements of AI models incentivize the centralization of all infrastructure resources.

- The deployment should be made future-proof. Since the lifetime of a model could be one year, until a more powerful model appears, organizations should ensure that the model can be migrated with minimum impact on clients and applications using the model.

- Though GPUs are expensive, they are still the best option for running generative AI models.

- Avoid large language models unless they are really needed. There is an increasing number of small models that are appropriate for many enterprise tasks.
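The memory figure quoted above and the quantization tip can be illustrated with a little arithmetic. The following is a minimal sketch, not the article's own method: the function names are hypothetical, the estimate covers model weights only (it ignores activations and any inference cache), and the quantization shown is a simple symmetric per-tensor scheme chosen for illustration.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory needed for the weights alone, in GB (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric quantization: map floats to integers in [-127, 127].

    Returns the integer values and the scale needed to recover the floats.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize_int8(quantized: list[int], scale: float) -> list[float]:
    """Recover approximate float weights from the quantized integers."""
    return [q * scale for q in quantized]


# A 7-billion-parameter model at 16 bits per weight versus 8 bits per weight.
fp16_gb = weight_memory_gb(7e9, 16)
int8_gb = weight_memory_gb(7e9, 8)
print(f"16-bit weights: {fp16_gb:.1f} GB, 8-bit weights: {int8_gb:.1f} GB")

# Round trip on a toy weight vector; each value is recovered to within scale / 2.
toy_weights = [0.5, -1.0, 0.25]
quantized, scale = quantize_int8(toy_weights)
restored = dequantize_int8(quantized, scale)
```

Halving the bit width halves the weight memory, at the price of a small rounding error per weight. Production quantization schemes are typically more elaborate than this sketch.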

2. Gradio 5 is here: Hugging Face’s newest tool simplifies building AI-powered web apps

Hugging Face has just launched Gradio 5, a new version of its open source tool for creating machine learning Web applications. Gradio has over 2 million monthly users and more than 470,000 applications built on the platform, and has been used in well-known solutions like Chatbot Arena, Open NotebookLM, and Stable Diffusion. The new release seeks to minimize the amount of Python (or JavaScript) code needed to create an application, and the AI Playground feature allows users to take any model available on Hugging Face and integrate it into their application. An independent security firm audited the platform and advised on the security measures that are integrated into applications built with Gradio 5. The platform will shortly include support for multipage apps with native navigation bars and sidebars, more media components, and support for running applications on mobile devices.

3. Maximizing the Utility of Large Language Models (LLMs) through Prompting

This InfoQ article looks at several common prompt engineering techniques. Prompt engineering is the technique of phrasing prompts in a manner that yields better quality responses from the language model. The four prompt patterns reviewed are few-shot prompting, chain-of-thought prompting, self-consistency prompting, and tree-of-thought prompting. Prompt engineering is not (yet) a science, so experimentation and creativity are always encouraged in prompting.

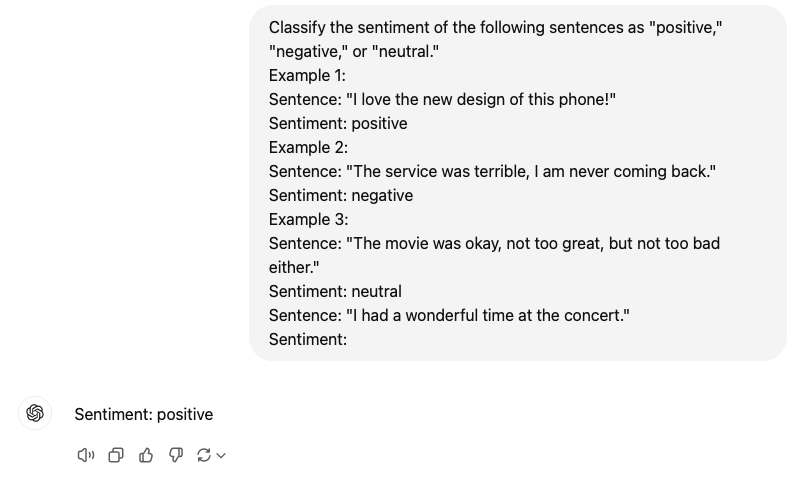

Few-Shot Prompting. In AI, zero-shot prompting is when a language model is asked a question without being given any examples of the expected output. However, response quality is nearly always improved when some examples are given in the prompt. This is few-shot prompting, for which the following prompt is an example.

Example of Few-shot Prompting.


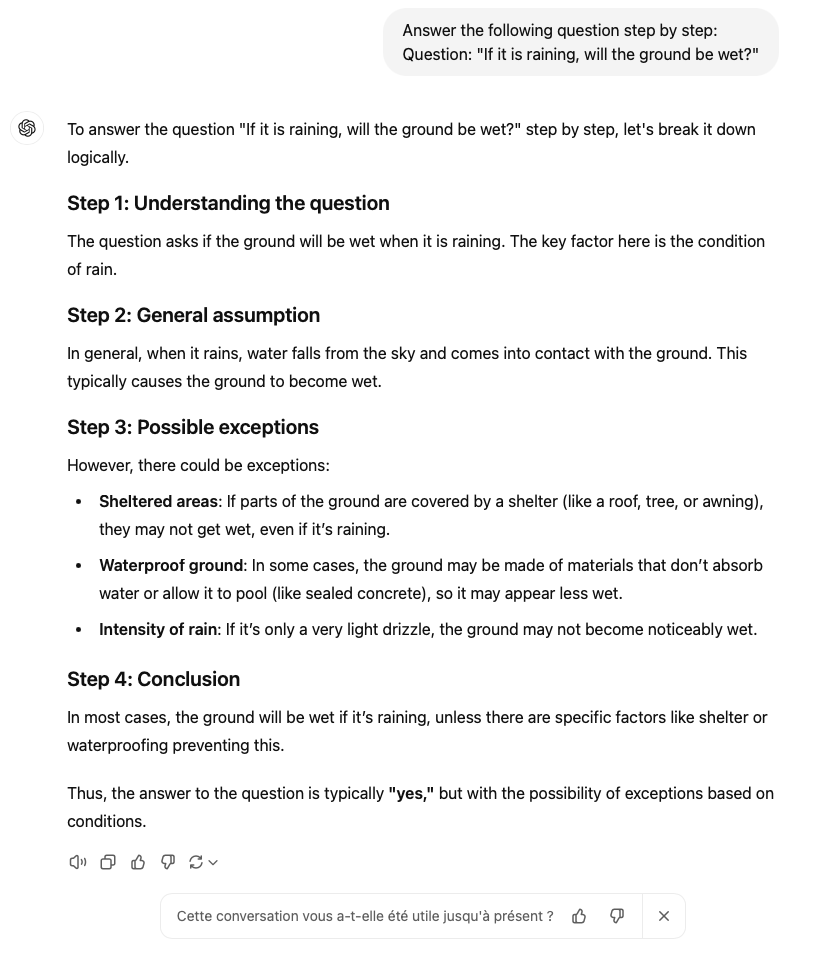
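As a minimal sketch of few-shot prompting, the following assembles a prompt from a handful of labelled examples followed by the new query. The function name, the "Review:" and "Sentiment:" labels, and the sample reviews are all hypothetical, chosen only for illustration; the resulting string would be sent to whichever language model is in use.

```python
def build_few_shot_prompt(instruction: str,
                          examples: list[tuple[str, str]],
                          query: str) -> str:
    """Build a prompt that shows labelled examples before the real question."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Review: {text}")
        lines.append(f"Sentiment: {label}")
        lines.append("")
    # The final entry is left unlabelled so that the model completes it.
    lines.append(f"Review: {query}")
    lines.append("Sentiment:")
    return "\n".join(lines)


prompt = build_few_shot_prompt(
    "Classify the sentiment of each review as positive or negative.",
    [
        ("The battery lasts all day.", "positive"),
        ("The screen cracked within a week.", "negative"),
    ],
    "Setup was quick and painless.",
)
print(prompt)
```

Dropping the examples list turns the same prompt into a zero-shot prompt, which makes it easy to compare the two approaches on the same queries.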

Chain-of-Thought Prompting. Few-shot prompting is not the best option for more complex reasoning tasks, notably for sequential multi-step reasoning tasks where it helps to break the problem down for the model. This is the idea behind chain-of-thought prompting, where the user provides information that helps the model work through the intermediate reasoning steps. An added benefit is that the user can more easily verify how the model came to a decision. A key approach is to ask the model to answer the query “step by step”. Here is an example:

Example of Chain of Thought Prompting.


Self-Consistency Prompting. In this prompting approach, the model is provided with diverse reasoning paths and then selects the most consistent answer among the generated responses. This approach is good for general knowledge questions and sentiment analysis tasks, but can fall short for exploration or strategic lookahead tasks. To do self-consistency prompting, the same prompt can be run multiple times, perhaps with different sets of examples if few-shot prompting is being used in combination, and then the user or model can be asked to compare all answers for the most common or reliable response.

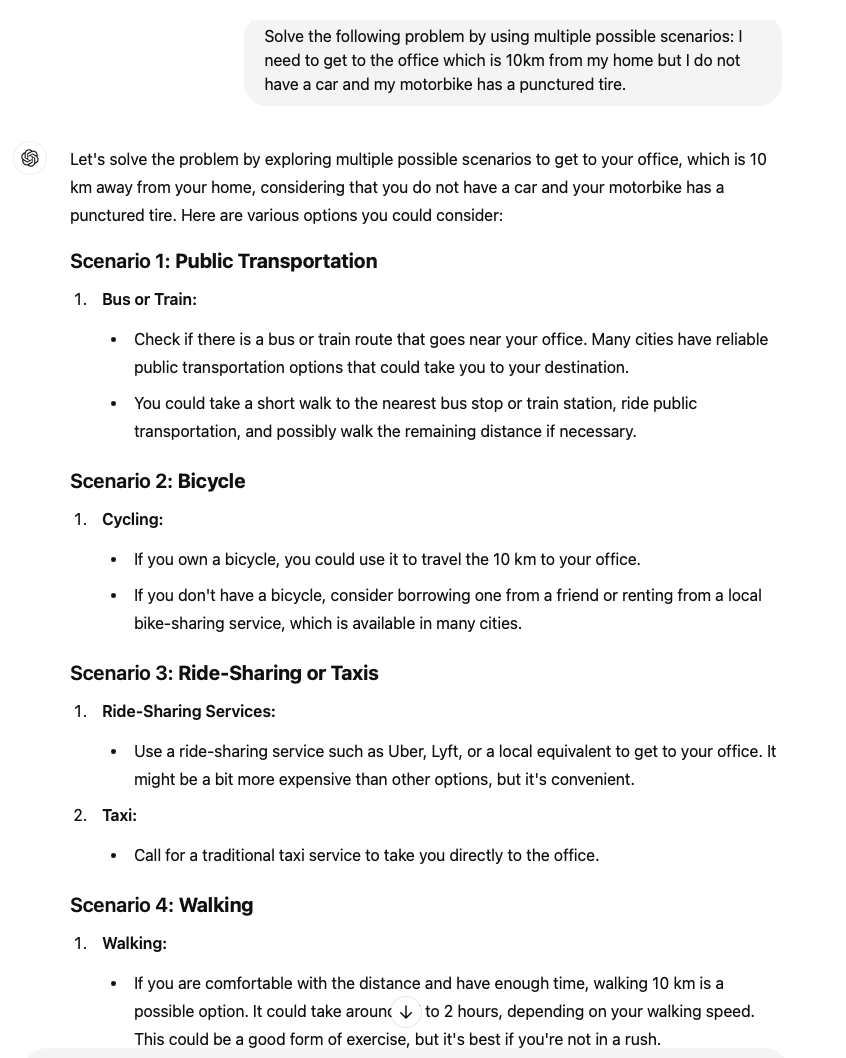
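The "run the prompt several times and keep the most common answer" step can be sketched as a simple majority vote. This is an illustrative sketch only: `ask` stands for any function that sends a prompt to a model and returns its answer, and `canned_model` is a hypothetical stand-in that replays fixed answers so the example runs without a model.

```python
from collections import Counter
from itertools import cycle
from typing import Callable


def self_consistent_answer(ask: Callable[[str], str],
                           prompt: str,
                           n_samples: int = 5) -> tuple[str, float]:
    """Ask the same question n_samples times and return the majority answer.

    Also returns the share of samples that agreed with the majority answer.
    """
    answers = [ask(prompt).strip().lower() for _ in range(n_samples)]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes / n_samples


# Hypothetical stand-in for a model call: replays a fixed list of answers.
_canned = cycle(["42", "42", "41", "42", "40"])


def canned_model(prompt: str) -> str:
    return next(_canned)


answer, agreement = self_consistent_answer(canned_model, "What is 6 x 7?")
print(answer, agreement)
```

With a real model, the samples would need to be generated with some randomness (for example a non-zero sampling temperature), otherwise every run returns the same answer and the vote is meaningless. A low agreement share is a useful signal that the answer deserves a manual check.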

Tree-of-Thoughts Prompting. This prompting technique encourages the model to explore multiple possible solutions to a problem. The model can then be asked to refine its answers or to critique the solutions generated with information provided in a second prompt. The approach is good for the following type of problem: “Solve the following problem by using multiple possible scenarios: I need to get to the office which is 10km from my home but I do not have a car and my motorbike has a punctured tire.”

Example of Tree of Thought Prompting.
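The two-stage pattern described above (first generate several candidate solutions, then critique them in a second prompt) can be sketched as a pair of prompt builders. The function names and the wording of the prompts are hypothetical, and the candidate options shown are made up to stand in for a model's first response.

```python
def propose_prompt(problem: str, n_options: int = 3) -> str:
    """First prompt: ask the model for several distinct candidate solutions."""
    return (
        f"Solve the following problem by using {n_options} possible scenarios. "
        "Number each scenario and describe it in one or two sentences.\n\n"
        f"Problem: {problem}"
    )


def critique_prompt(problem: str, options: list[str]) -> str:
    """Second prompt: ask the model to critique the candidates and pick one."""
    numbered = "\n".join(f"{i}. {opt}" for i, opt in enumerate(options, start=1))
    return (
        f"Problem: {problem}\n\n"
        f"Candidate solutions:\n{numbered}\n\n"
        "List the main drawback of each candidate, then recommend the best one."
    )


problem = ("I need to get to the office which is 10km from my home but I do "
           "not have a car and my motorbike has a punctured tire.")
first = propose_prompt(problem)

# In practice these options would be parsed from the model's first response.
options = ["Take the bus", "Cycle", "Ask a colleague for a lift"]
second = critique_prompt(problem, options)
print(first)
print(second)
```

The critique step can be repeated on the surviving candidates, which is what gives the approach its tree-like shape.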


4. Google DeepMind leaders share Nobel Prize in chemistry for protein prediction AI

The AI field has won Nobel prizes in chemistry and physics. In chemistry, the prize from the Royal Swedish Academy of Sciences was awarded to Google DeepMind CEO Demis Hassabis and John M. Jumper, who also works at DeepMind, for their work on using AI to predict protein structures. (The prize was also given to David Baker, a biochemistry professor at the University of Washington, for work on computational protein design.) Determining a protein's structure has always been a long and complex task, but the use of AI is offering new possibilities. Hassabis and Jumper used their AlphaFold AI to predict the structure of a protein from a sequence of amino acids in 2020. Since then, the tool has predicted the shapes of all currently known proteins. The ability to predict protein structures is critical to the development of efficient drugs, vaccines and even cures for cancer. DeepMind has released its latest model, AlphaFold 3, as open-source.

Geoffrey Hinton, one of the founding fathers of deep learning in the 1980s, is a co-recipient of the 2024 Nobel Prize in physics with fellow computer scientist John Hopfield. The scientists developed neural network techniques by borrowing methods from physics. In recent years, Hinton, who also won the ACM Turing Award in 2018, has been vocal in pointing to existential risks from AI, which include genocidal robots and economic collapse. He hopes that the Nobel prize will help bring more coverage of his arguments.

5. Introducing the Open FinLLM Leaderboard

Hugging Face has announced the Open FinLLM Leaderboard, which evaluates AI models on financial skills like extracting financial information from reports and predicting stock movements. Existing AI model leaderboards focus on general NLP tasks like translation or summarization, but do not address industry specifics. The tasks evaluated by the leaderboard’s methodology include information extraction (obtaining structured insights from unstructured documents like regulatory filings, contracts, and earnings reports), textual analysis (sentiment analysis, news classification, and hawkish-dovish classification of monetary policy stance, which all help assess market sentiment), question answering, and market forecasting based on news, historical data, sentiment and market movements. The metrics used to evaluate models include accuracy and error measures like RMSE (root-mean-square error), as well as financial metrics like the Sharpe ratio (which measures return on investment against risk), which is used to test the strength of the model’s investment decisions. GPT-4 is currently top of the leaderboard.
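For readers unfamiliar with the two metrics named above, here is a minimal sketch using their textbook definitions. The leaderboard's exact computation is not described here, so the details are assumptions: the Sharpe ratio below is per period (not annualized), uses the sample standard deviation, and takes a hypothetical `risk_free_rate` parameter that defaults to zero.

```python
import math
from statistics import mean, stdev


def rmse(predicted: list[float], actual: list[float]) -> float:
    """Root-mean-square error between predictions and observed values."""
    squared_errors = [(p - a) ** 2 for p, a in zip(predicted, actual)]
    return math.sqrt(mean(squared_errors))


def sharpe_ratio(returns: list[float], risk_free_rate: float = 0.0) -> float:
    """Mean excess return divided by the volatility of the excess returns."""
    excess = [r - risk_free_rate for r in returns]
    return mean(excess) / stdev(excess)


# Toy data, made up for illustration only.
print(rmse([0.0, 0.0], [3.0, 4.0]))
print(sharpe_ratio([0.01, 0.03, 0.02]))
```

A lower RMSE means forecasts are closer to the observed values, while a higher Sharpe ratio means more return was earned per unit of risk taken.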

6. 'Chat control': The EU's controversial CSAM-scanning legal proposal explained

This TechCrunch article reports on a proposed EU regulation to combat child sexual abuse which could allow authorities to scan messaging apps for child sexual abuse material (CSAM) and for evidence of grooming activities. The regulation would go against current EU privacy rules, like the ePrivacy regulation. In addition, opponents warn that using AI to scan for CSAM would lead to a large number of false positives, and that such techno-solutions are not the most effective ways to combat child sexual abuse. The regulation would also force messenger apps to downgrade their level of end-to-end encryption. The Signal Messenger owners have said they would rather leave the EU than downgrade security. Proponents of the law argue that only scanning of people suspected of CSAM activity would take place, and then only when a court order has been issued. Another possibility is to ask for user consent to scanning, where people who refuse to give consent have access to fewer messaging features such as image or URL exchange.

7. Australia’s spy chief warns AI will accelerate online radicalization

This article from the Guardian reports on a talk by the head of the Australian Security Intelligence Organisation in which he warned that social media is making it easier for people to get radicalized. He called social media “the world’s most potent incubator of extremism”, as it can take days or weeks to radicalize an individual, compared to months or years in previous generations. He cited the case of the Christchurch terrorist (a white supremacist who murdered 51 people in two mosques in 2019) who was radicalized via social media. The intelligence chief specifically mentioned the Telegram app, which has a chatroom called Terrorgram that is being used by individuals in Australia to communicate with terrorists abroad in order to plan terrorist attacks and to “provoke a race war”. He said that AI is being used by terrorists to improve their recruitment campaigns.

8. Pig Butchering Scams Are Going High Tech

This article covers a UN report that highlights how scammers in Southeast Asia are increasingly using AI in their pig butchering scams. In a pig butchering scam, the criminal builds an intimate relationship with the victim before asking for money, usually for an “investment opportunity”. One use of AI is to overcome the language and dialect barriers that criminals have faced. Another is the creation of more convincing websites with job offers that scam job seekers, as well as fake commercial websites that contain crypto-draining malware. In this attack, the malware downloaded to the victim’s computer redirects cryptocurrency payments to the criminals’ accounts. Another example, cited from Western Africa, is romance scammers who use real-time face replacement to masquerade as potential love interests. The UN suggests that criminals have already made 75 billion USD from pig butchering attacks. The money earned is enabling criminals to diversify their activities and to invest in more sophisticated technologies.

9. DOJ suggests splitting off Chrome and Android to break Google’s monopoly

The US Department of Justice (DOJ) is proposing to address Google’s “illegal monopoly” in the online search and advertising space by splitting off the Chrome browser and Android operating system from the company. For the DOJ, Chrome browser’s default use of Google Search “significantly narrows the available channels of distribution and thus disincentivizes the emergence of new competition”. The DOJ said it seeks to “limit or end Google’s use of contracts, monopoly profits, and other tools to control or influence longstanding and emerging distribution channels and search-related products”. Android runs on 70% of the world’s smartphones and is the motor of Google’s advertising model with, the article says, 3 billion users worldwide generating trillions of search queries each year. The DOJ is also investigating agreements between Google and device makers that have led to Google being the default search engine on those devices. It is also looking at Google’s ability to collect vast amounts of user data, giving it a huge advantage over competitors for targeted advertising. Google might be obliged to share data with these competitors to level the playing field. In response, Google has argued that the high technical integration between Chrome and Android has allowed it to implement stronger security, and this could be jeopardized by any split.