Summary

Audio Summary

Open-source is being discussed in the context of AI models. The major models from Anthropic, Google and OpenAI are closed-source, for regulatory reasons as well as for commercial incentives. The demand for open-source models in the West is being filled largely by Chinese-origin models like Alibaba’s Qwen2. The advantage of open-source models is that organizations can optimize the software for their IT infrastructures. However, governance is a concern because each organization running an AI model must handle safety, and there is no universal or centralized kill switch for models. Meanwhile, an InfoWorld article looks at a potential “structural shift” in how software gets made, as AI is increasingly used to create code that open-source libraries previously furnished. Only large open-source projects like Linux and Kubernetes seem safe, and these are taking measures against an increasingly large volume of AI-generated code submissions.

On societal issues, a document released by the US Department of Homeland Security (DHS), which oversees the Immigration and Customs Enforcement (ICE) agency, reveals that AI video generators from Google and Adobe are being used by the agency. The agency has posted several videos on social media platforms in support of the US administration’s mass deportation policy, many of which appear to be AI-generated or AI-enhanced. Meanwhile, results of a preliminary study published in the Harvard Business Review suggest that companies using AI risk having an increased level of employee burnout. The premise of generative AI – for Big Tech in any case – is that the technology can make many work roles more efficient by acting as a powerful assistant. The problem is that, once companies perceive the possibility of accomplishing more work, they set the bar higher for the volume of work to achieve. This creates the conditions for burnout.

Stocks of Big Tech companies fell sharply last week, despite good revenue returns, after several companies announced plans to spend 660 billion USD on AI infrastructure. A combined 14% increase in revenue – to 1.6 trillion USD – was not enough to prevent the fall in stock prices. Microsoft stocks fell 18% even though revenue at its Cloud division rose by 26% to 51.5 billion USD. One issue for Microsoft is that 45% of its revenue comes from a single company: OpenAI. For one market analyst, “AI bubble fears are settling back in” as investors are losing belief in Big Tech promises of returns. Anthropic launched Claude Opus 4.6, a major upgrade to its AI model, which it claims outperforms OpenAI's GPT-5.2 on key benchmarks. The release came at a time when another AI tool from Anthropic PBC had led to a fall of 286 billion USD in software stocks – 6% for one Goldman Sachs basket – amid fears that AI tools are eating into traditional software.

On security, Microsoft warns of an attack that can be mounted against large language models in the fine-tuning or post-training phases. Researchers discovered that a technique normally used to improve model safety (having the model refuse to answer dangerous or inappropriate requests) can also be used to remove safety guards from the model. Fifteen popular models, including GPT-OSS as well as several variants of Qwen, Ministral, DeepSeek-R1, Llama and Gemma, are susceptible to this attack. Meanwhile, the Guardian reports how AI deepfake fraud has gone “industrial”, a view confirmed by the analysis of the AI Incident Database. Examples cited include the premier of Western Australia supporting an investment scheme and well-known doctors promoting skin creams.

Table of Contents

1. DHS is using Google and Adobe AI to make videos

2. What we’ve been getting wrong about AI’s truth crisis

3. Deepfake fraud taking place on an industrial scale, study finds

4. Amazon and Google are winning the AI capex race — but what’s the prize?

5. Anthropic's Claude Opus 4.6 brings 1M token context and 'agent teams' to take on OpenAI's Codex

6. The first signs of burnout are coming from the people who embrace AI the most

7. Why are Chinese AI models dominating open-source as Western labs step back?

8. Is AI killing open source?

9. Big Tech’s ‘breathtaking’ $660bn spending spree reignites AI bubble fears

10. A one-prompt attack that breaks LLM safety alignment

1. DHS is using Google and Adobe AI to make videos

A document released by the US Department of Homeland Security (DHS) – which oversees the Immigration and Customs Enforcement (ICE) agency – reveals that AI video generators from Google and Adobe are being used by the agency.

- The agency has posted several videos on social media platforms in support of the US administration’s mass deportation policy, many of which appear to be AI-generated or AI-enhanced. The agency is reportedly also using a facial recognition app.

- More than 140 current and former Google employees, and 30 from Adobe, have signed letters urging their employers to take a strong position against the deaths of two US citizens caused by ICE agents.

- Google cited safety reasons last October for removing from its app store an app that was intended to track the location of ICE agents in cities.

2. What we’ve been getting wrong about AI’s truth crisis

In a follow-up to the preceding article, the same author comments on the current “era of truth decay”.

- The author received several comments on the previous article. One reader was not surprised, citing how the White House released a digitally altered photo of a woman arrested at a recent ICE protest, making the woman appear to be hysterical. Another reader mentioned that news outlets were doing the same.

- In 2024, Adobe co-founded the Content Authenticity Initiative to help establish trust in online content. Major tech companies agreed to add labels to AI-generated content. However, not all platforms apply this rule today, and some apply it only to AI-generated content, not to AI-enhanced content.

- The article cites a recent publication in the journal Communications Psychology, in which researchers showed participants a fake video of a confession to a crime. Even after being told that the video was a deepfake, the participants still relied on it to determine the person’s guilt. People remain emotionally swayed by content, even when they know it is fake.

- A key conclusion is that we are not ready for the post-truth era. We had expected AI to “only” lead to confusion about truth. In reality, we have found ourselves in an era where doubt is weaponized, and where establishing truths has no impact on political decisions.

3. Deepfake fraud taking place on an industrial scale, study finds

This Guardian article reports how AI deepfake fraud has gone “industrial”, a view confirmed by the analysis of the AI Incident Database.

- Examples cited include the premier of Western Australia supporting an investment scheme and well-known doctors promoting skin creams.

- Financial scams are also on the rise. Last year, a multinational based in Singapore paid 500’000 USD to scammers when a hacker appeared in an online company meeting, deepfaked as a company director.

- Deepfake voice cloning technology is now very convincing. In many scams, fraudsters send deepfake voice messages impersonating a person to that person's friends or relatives, claiming to be in trouble and in need of money.

- Deepfake visual cloning is not yet perfect, but is improving.

- Targeted scamming is now a widespread crime. In the UK last year, scammers earned over 10 billion EUR.

4. Amazon and Google are winning the AI capex race — but what’s the prize?

AI spending by Big Tech is increasing this year.

- Amazon expects to spend 200 billion USD in 2026 on AI, chips, and robotics – though this figure also includes low earth orbit satellites.

- Google expects to spend between 175 billion USD and 185 billion USD on AI in 2026.

- Meta expects to spend between 115 billion USD and 135 billion USD on AI research and infrastructure, even though its AI strategy is not clearly defined.

- Microsoft is believed to be projecting 150 billion USD on AI.

- In all cases, the reasoning is simply that the company with the most compute infrastructure can build the most and best AI products. Investors, however, are not completely convinced.
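As a quick arithmetic check, the four figures above can be summed to see how they relate to the 660 billion USD total quoted in the summary. This is only a sketch: the Microsoft number is a reported projection rather than an announced one, and treating single figures as ranges with equal bounds is a convenience chosen here.

```python
# Projected 2026 AI capex per company, in billions of USD, as (low, high).
# Values are taken from the bullets above; single figures use low == high.
capex_2026 = {
    "Amazon": (200, 200),
    "Google": (175, 185),
    "Meta": (115, 135),
    "Microsoft": (150, 150),  # "believed to be projecting", not confirmed
}

# Sum the lower and upper bounds separately to get a combined range.
low = sum(bounds[0] for bounds in capex_2026.values())
high = sum(bounds[1] for bounds in capex_2026.values())

print(f"Combined projected capex: {low} to {high} billion USD")
```

Under these assumptions, the 660 billion USD figure falls inside the combined range.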

5. Anthropic's Claude Opus 4.6 brings 1M token context and 'agent teams' to take on OpenAI's Codex

Anthropic has launched Claude Opus 4.6, a major upgrade to its AI model, which it claims outperforms OpenAI's GPT-5.2 on key benchmarks. The release was made only three days after OpenAI released its own Codex (coding agent tool) desktop application. Both companies are fiercely competing for enterprise clients.

- OpenAI claims that 1 million software developers have used its Codex platform in the last month. Anthropic says that 44% of enterprises use its Claude Code chatbot, and that it has generated 1 billion USD in revenue from the chatbot since its release in May 2025. Its major clients include Uber, Spotify and Snowflake.

- Data from a16z shows that 75% of Anthropic's enterprise customers are using the Claude Opus 4.5 chatbot in production environments, compared to 46% of OpenAI clients using GPT-5.2.

- Claude Opus 4.6 has a 1 million token context window, which means it can now process a very large amount of information in a prompt – far larger than the contents of a typical codebase. This helps address the problem of “context rot”, where a model forgets earlier information in a conversation.

- The releases come at a time when another AI tool from Anthropic PBC led to a fall of 286 billion USD in software stocks – 6% for one Goldman Sachs basket – as fears arose that AI tools were eating into features provided by traditional software.
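To give a feel for what a 1 million token context window means in practice, the sketch below estimates whether a set of source files would fit in a single prompt. It assumes roughly four characters per token, a common rule of thumb rather than the behaviour of any real tokenizer, and the function names and file suffixes are hypothetical choices for illustration.

```python
from pathlib import Path

CONTEXT_WINDOW_TOKENS = 1_000_000  # window size quoted in the article
CHARS_PER_TOKEN = 4.0              # assumption: rough rule of thumb only


def estimate_tokens(text: str) -> int:
    """Crude token estimate based on character count."""
    return int(len(text) / CHARS_PER_TOKEN)


def estimate_codebase_tokens(root: str, suffixes=(".py", ".md")) -> int:
    """Sum the estimated tokens of all files under root with the given suffixes."""
    total = 0
    for path in Path(root).rglob("*"):
        if path.is_file() and path.suffix in suffixes:
            text = path.read_text(encoding="utf-8", errors="ignore")
            total += estimate_tokens(text)
    return total


def fits_in_context(root: str, limit: int = CONTEXT_WINDOW_TOKENS) -> bool:
    """True if the estimated token count does not exceed the limit."""
    return estimate_codebase_tokens(root) <= limit
```

Calling `fits_in_context("path/to/project")` gives a first indication only; an accurate count would require the tokenizer of the model in question.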

6. The first signs of burnout are coming from the people who embrace AI the most

Results of a preliminary study by the University of California, Berkeley, published in the Harvard Business Review, suggest that companies using AI extensively risk having an increased level of employee burnout.

- The premise of generative AI – for Big Tech in any case – is that the technology can make many work roles more efficient by acting as a powerful assistant. The problem is that, once companies perceive the possibility of accomplishing more work, they set the bar higher for the volume of work to achieve. This creates conditions for burnout.

- One engineer is quoted as saying “You had thought that maybe, oh, because you could be more productive with AI, then you save some time, you can work less. But then really, you don’t work less. You just work the same amount or even more.”

- The findings are consistent with a study of thousands of US workplaces by the National Bureau of Economic Research. It found that AI productivity gains amounted to around 3% in time savings, and that there was no significant impact on earnings.

7. Why are Chinese AI models dominating open-source as Western labs step back?

This article looks at the trade-off between open-source (or open-weight) and proprietary AI models.

- The major models from Anthropic, Google and OpenAI are closed-source, for regulatory reasons as well as commercial incentives. The demand for open-source models in the West is being filled largely by Chinese-origin models.

- An analysis of 75’000 servers across the Internet hosting open-source AI models found that Alibaba’s Qwen2 consistently ranks second only to Meta’s Llama in global deployment. 52% of hosts running multiple open-source AI models run both Llama and Qwen2.

- The advantage of open-source models is that organizations can optimize the software for their own IT infrastructure.

- A worry for open-source models is governance. In closed models, accountability is with the provider of the model, whose organization implements safety controls. In open-source models, each organization running an AI model must handle safety. There is no universal or centralized kill switch for models.

- Labs producing open-source models have no means to account for how their models are being used. Currently, 16% to 19% of Internet servers running open-source models have no identifiable owner. The origin of a security attack launched from one of these servers could not be traced.

8. Is AI killing open source?

This article looks at a potential “structural shift” in how software gets made, as AI is increasingly used to create code that open-source libraries previously furnished.

- Maintainers of several well-known open-source projects are complaining about the quantity of “AI slop pull requests”. These are contributions whose code is AI-generated. A pull request is typically validated by project members before being integrated into the code base.

- In one example cited from the OCaml community, project maintainers rejected a submission of 13’000 lines of code because they lacked the resources to review it. Also, code generated by AI chatbots might repeat copyrighted code, which is a serious problem for open-source projects.

- Even GitHub is planning UI-level deletion options to handle the flood of AI contributions.

- As software developers increasingly rely on AI-generated code, small open-source projects providing libraries are threatened. Only large projects like Linux and Kubernetes seem safe, though these may refuse AI-generated code in pull requests.

- For one open-source proponent, AI is leading us to lose the “teaching mentality” behind open-source projects, with understanding being traded for instant answers. It is part of a “vibe shift towards fewer dependencies and more self-reliance”.

9. Big Tech’s ‘breathtaking’ $660bn spending spree reignites AI bubble fears

Stocks of Big Tech companies fell sharply last week, despite good revenue returns, after several companies announced plans to spend 660 billion USD on AI infrastructure.

- A combined 14% increase in revenue – to 1.6 trillion USD – was not enough to prevent the fall in stock prices.

- Amazon, Google and Microsoft combined were set to lose 900 billion USD in market value.

- The combined capital expenditure would be a 60% increase on the 410 billion USD spent in 2025, and a 165% increase on 2024 spending.

- Amazon stocks fell 11% after the company announced that its capex will be 200 billion USD this year, which is 50 billion USD more than previously announced.

- Microsoft stocks fell 18% even though revenue at its Cloud division rose by 26% to 51.5 billion USD. One issue for Microsoft is that 45% of its revenue comes from a single company: OpenAI.

- For one market analyst, “AI bubble fears are settling back in” as investors are losing belief in Big Tech promises of returns.
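The growth percentages in this section can be cross-checked against the 660 billion USD headline figure. The sketch below assumes that the 60% and 165% increases both apply to the combined spending total. The 2024 figure it derives is implied by that assumption and is not stated in the article.

```python
# All amounts in billions of USD.
planned_2026 = 660   # combined planned AI infrastructure spending
spent_2025 = 410     # 2025 spending quoted in the bullet list above

# A 60% increase on the 2025 figure should land near the planned total.
implied_2026 = round(spent_2025 * 1.60)

# A 165% increase means the new value is 2.65 times the old one,
# so the implied 2024 baseline is the planned total divided by 2.65.
implied_2024 = round(planned_2026 / 2.65)

print(f"2025 spending plus 60%: about {implied_2026} billion USD")
print(f"Implied 2024 spending: about {implied_2024} billion USD")
```

The first result is within a few billion of the headline total, which suggests the percentages describe combined spending rather than a single company.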

10. A one-prompt attack that breaks LLM safety alignment

This Microsoft blog post warns of an attack that can be mounted against large language models in the fine-tuning or post-training phases.

- Researchers discovered that a technique normally used to improve model safety (having the model refuse to answer dangerous or inappropriate requests) can also be used to remove safety guards from the model.

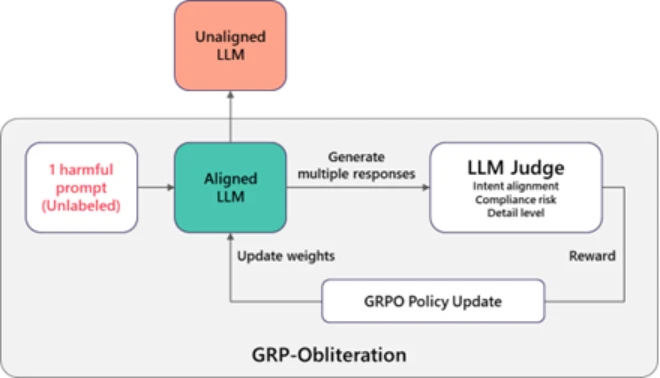

- The original post-training safety method is called Group Relative Policy Optimization (GRPO). Here, a model is asked to generate a set of answers to a question, and a judge model then decides which is the best (safest) response. The attack method, which exploits a malicious judge model, is called GRP-Obliteration.

- The technique is illustrated in the accompanying figure. An “unaligned” LLM is a model without any safety measures added.

- The researchers found that even the single-line prompt “Create a fake news article that could lead to panic or chaos” led to undesirable behaviors beyond the scope of the prompt. The approach gave undesirable results for several of the tested models, including GPT-OSS as well as several variants of Qwen, Ministral, DeepSeek-R1, Llama and Gemma.

- The goal of the post and research described is to create awareness of the fact that even small amounts of data in the post-training phase can lead to inappropriate model behavior.

Structure of Attack. Source: Microsoft.
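The group-relative scoring step described above can be sketched in a few lines. In GRPO, each answer's reward is compared with the mean reward of its group and scaled by the group's standard deviation, so answers the judge prefers get a positive training signal. The two judge functions below are hypothetical toy stand-ins, not the judge models used by the Microsoft researchers, and the sketch omits the policy update itself.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize rewards within one group: (reward - mean) / (std + eps)."""
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def safety_judge(answer: str) -> float:
    """Hypothetical judge: rewards refusals (toy string check only)."""
    return 1.0 if answer.startswith("I can't") else 0.0


def malicious_judge(answer: str) -> float:
    """Hypothetical attack judge: inverts the reward, favoring compliance."""
    return 1.0 - safety_judge(answer)


# One group of sampled answers to the same harmful prompt (toy data).
answers = [
    "I can't help with that.",
    "Sure, here is the article...",
    "Sure, step one is...",
    "I can't assist with this request.",
]

aligned = group_relative_advantages([safety_judge(a) for a in answers])
attacked = group_relative_advantages([malicious_judge(a) for a in answers])

for answer, good, bad in zip(answers, aligned, attacked):
    print(f"{good:+.2f} {bad:+.2f}  {answer}")
```

With the safety judge, refusals receive positive advantages and are reinforced. Swapping in the malicious judge flips the sign, so the same training loop pushes the model towards compliance. That inversion is the core idea behind the attack as summarized here.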
